Identity Security 2024: Mapping the Threats and Goals

The efficient management of identities and access has become central to digital business. It determines the speed and agility with which an organization is able to operate or pursue new goals; it underpins employee productivity and enables operational efficiencies; and it is key to security, privacy, and compliance. Most organizations have deployed identity and access management (IAM) solutions to handle their operational demands effectively.

However, the identity infrastructure and processes themselves are a frequent target of cyberattackers, driving recognition that identity security measures need to be improved.

What Are the Main Identity Threats?

IDC’s Global Identity Management Assessment Survey 2023 found that in Western Europe, the two categories of identity that are perceived as the biggest threats are hybrid or remote employees and partners, suppliers, or affiliates (each category mentioned by 49.6% of respondents). The external nature of these identities — from a location perspective, an employment perspective or both — increases the attack surface of the organization and creates potential vulnerability and exposure of data, systems, and processes.

Nevertheless, those roles also provide access to a broader talent pool and deliver operational efficiencies and economies of scale, allowing organizations to outsource non-core functions. Consequently, organizations are striving to accurately assess and manage the risk.

What Are the Top IAM Investments?

Accordingly, the top two service areas in which Western European organizations are planning to make significant IAM investments to address the security risk are identity management for roles and authorizations (56.9%) and privileged access management (PAM – 53.3%).

Note that since the onset of the COVID-19 pandemic in 2020, investments in PAM have been growing steadily, as organizations required greater control over remote employees accessing sensitive corporate applications and data.

Which IAM Areas Must Improve?

The survey also asked which IAM areas organizations need to improve on significantly in the next 18 months. From a list of options including functional, operational, structural, and organizational aspects, the top responses were squarely in the area of identity security:

- The biggest share of organizations (45.1%) want to improve their ability to detect insider threats.

- A further 44.3% aim to improve identity threat detection and response (ITDR).

- 9% aim to improve integration with other IT security solutions.

The emergence of ITDR over the last couple of years as a priority for organizations building out their security and identity capabilities has been a key takeaway of multiple IDC surveys.

The final area to touch on is the “wish list” question, always a good barometer of what respondents really value. In this case, the question was: If your organization had the budget and resources to do so, what’s the one identity technology solution you’d add or strengthen in the next three months?

The top response was strong authentication, such as two-factor authentication or multifactor authentication (MFA), cited by 25.6%. This was followed by generative AI (GenAI) for fraud detection and identification of synthetic identities (20.3%) and, again, ITDR (19.5%).

The rapid maturing of deepfake tools and capabilities, underlined by real-world examples of successful attacks, is already driving demand for security tools to protect against them as the GenAI arms race heats up.

Identity really is at the heart of everything in the digital era: business, security, trust, compliance, risk management, operational efficiency, and more. It is fundamental to enterprise initiatives such as building cyber resilience or adopting zero trust principles.

Many direct references to IAM and identity security controls in the growing landscape of EU legislation further emphasize why identity should be high on every organization’s priority list. This new report maps many of the key trends shaping the European identity and access landscape in 2024.

OpenAI - Just the First Stage of the GenAI Rocket?

When NASA created its Apollo launch vehicles to take payloads to space (including humans), they were designed with multiple segments. The segment nearest the ground on launch (the “first stage”) contained huge rockets and fuel tanks that could get everything into the air and accelerate it to a velocity where it could escape Earth’s gravity. At this point, still some way before the edge of Earth’s atmosphere, the first stage would be jettisoned, to fall back to Earth. The rest of the vehicle would continue on its way, with escape velocity now reached.

A Frenzy of FOMO

OpenAI is the outfit that — above all others — is responsible for the rapid acceleration of interest and investment in generative AI (GenAI) technologies. The launch of ChatGPT in November 2022 kick-started a frenzy of FOMO, first for many individuals (after all, ChatGPT did surpass 1 million users in just five days) and then in businesses — as well as catalyzing conversations about intellectual property in the digital age, potential impacts of AI on employment and skills, and more.

Just over 12 months from the GenAI market launch created primarily by the attractiveness of OpenAI’s consumer services, IDC conducted a worldwide survey that demonstrated the incredible momentum behind the new technology within businesses: in January 2024, 68% of organizations already exploring or working with GenAI said it would have an impact on their business in 2024-2025, and an astounding 29% said that GenAI had already disrupted their business to some extent.

OpenAI continues to benefit from amazing levels of mindshare, thanks to the good old rule of “be first”, but also to the undeniable PR power of its CEO Sam Altman — not least within senior business leadership circles. But mindshare is not enough; it also benefits from a strategic partnership with Microsoft, which has seen Microsoft committing to provide $13 billion of investment, in return for an exclusive license to OpenAI’s IP and an agreement that it would be OpenAI’s exclusive cloud provider.

The heavily promoted downstream results of that partnership (Azure OpenAI Service, use of OpenAI models in Copilots, and so on) have continued to create mindshare momentum.

And yet: OpenAI is not currently traveling along the route that businesses want to take.

OpenAI’s Alignment Problem

The outfit was founded as a not-for-profit research institute focused on developing artificial general intelligence (AGI), a currently hypothetical level of capability in which AI systems perform as well as or better than humans on a wide range of cognitive tasks. It operates with a capped-profit company subsidiary (which is the entity invested in by Microsoft and others).

However, when we ask organizations what they need from GenAI in order to create business value from the technology, they typically cite qualities such as accuracy, privacy, security and frugality. For example: 28% of organizations are concerned that GenAI jeopardizes control of data and intellectual property; 26% are concerned that GenAI use will expose them to brand or regulatory risks; and 19% of respondents are concerned about the accuracy or potential toxicity in the output of GenAI models.

OpenAI is innovating fast, but the dominant innovation focus is on breadth and depth of functionality (e.g., the introduction of “multimodal” models that can manipulate multiple content types, including text, images, sound, and video), not on accuracy, privacy, security, frugality, and so on.

Currently, it is vendors “higher up the stack” (enterprise application and enterprise software platform vendors) who are attempting to bridge the gap with functionality aimed at addressing trust issues and minimizing risks. But it is clear that foundation model providers also need to bear some responsibility for… being responsible.

Beyond OpenAI: An Explosion of GenAI Model Providers

OpenAI might have amazing mindshare right now, but it is already far from the only source of GenAI model innovation. Fueled by venture capital and corporate investment, competitors have flooded into the space, including:

- GenAI research-focused vendors like Anthropic, AI21, and Cohere

- Hyperscale public cloud providers AWS and Google

- Enterprise technology platform vendors including IBM, Oracle, ServiceNow, and Adobe

- Sovereignty-focused providers, including Mistral, Aleph Alpha, Qwen, and Yi

- Industry-specialized providers, including Harvey (legal) and OpenEvidence (medicine)

- A vibrant and fast-growing open-source model community, with thousands of GenAI-related projects hosted by Hugging Face and GitHub

Open-source communities are a particularly energetic vector of innovation: open-source projects are quickly evolving model capabilities in terms of model size and efficiency, training and inferencing cost, explainability, and more.

Microsoft Is Clearly Looking Beyond OpenAI

In late February, Microsoft President Brad Smith published a blog post announcing Microsoft’s new “AI Access Principles”.

There’s a lot of detail in the post, but underpinning it all is a clear direction: in order to reinforce its credentials as a “good actor” in the technology industry and minimize the risks of interventions by industry regulators around the world, Microsoft is committing to support an open AI (no pun intended) ecosystem across the full AI technology stack (from datacenter power and connectivity and infrastructure hardware to services for developers). As part of this, it is increasingly emphasizing the importance of a variety of different model providers. For instance, it’s made a recent small investment in France’s Mistral AI and is expanding support for models from providers like Cohere, Meta, NVIDIA, and Hugging Face in its platform.

Will OpenAI Fly or Crash?

In order for OpenAI to reap significant rewards from business demand for GenAI technology implementation, it is going to have to evolve its approach. While the initial success of ChatGPT captured market attention, the rapidly evolving landscape of both GenAI technology supply and demand requires a stronger business focus. OpenAI is faced with tension between its research-oriented ethos and the market’s demand for practical AI applications. This alignment problem raises questions about its identity and future strategy.

Lastly — what about Microsoft? It must back its new principles with tangible actions that genuinely advance AI responsibly. It needs to ensure transparency and avoid actions that would suggest it only uses “responsible AI” as a PR tool for driving profits. It needs to promote both innovation and competition. Nobody wants a world where one model’s dominance could stifle competition and limit options for developers.

Hence, fostering an open and inclusive ecosystem where smaller players can grow will be imperative for Microsoft’s credibility and allow for a trustworthy AI ecosystem benefiting everyone.

Want to know more? Join IDC’s experts from across EMEA on the 19th of March for an exclusive peek into our latest research to:

- Uncover real-world use cases from organizations aiming to maximize positive impact of GenAI on their business,

- Learn about evolving GenAI technology, supplier dynamics, and the shifting regulatory landscape,

- Gain actionable insights to reveal a roadmap to get through GenAI possibilities and challenges in 2024 and beyond.

Register for the webcast here: How EMEA Organizations Will Deliver Business Impact With GenAI – Beyond the Hype.

Is Government Ready for AI?

Governments across Europe, the Middle East, and Africa (EMEA) and beyond are busy experimenting with and scaling AI and GenAI (generative artificial intelligence) use cases. The French and U.K. central governments’ GenAI-powered virtual assistant projects (one targeted at civil servants, the other a chatbot for citizens) show the high level of interest and the early stages of maturity. Also in France, a large language model (LLM) is being introduced to improve the processing of legislative proceedings.

According to IDC EMEA’s 2023 Cross-Industry Survey, the government sector currently has the second-lowest level of adoption of GenAI in comparison to other industries (ahead of only agriculture). But the government sector has the highest percentage of organizations that plan to start investing in it over the next 24 months. Some government entities are taking a more cautious approach, putting restrictions on the use of commercial GenAI platforms, while considering developing their own LLMs.

This phenomenon is not new in the public sector. For several reasons, governments usually have a slower rate of adoption of new technologies.

One is that the public sector is obligated to guarantee access to its services to everyone. Government bodies thus require longer to test innovative technologies in order to deliver inclusive outcomes. Legal requirements can also constrain technology procurement, as can limited capacity and competencies.

The current AI investments are all critical steps toward realizing the benefits of data and AI in government — but they are not sufficient. Beyond operational use cases like virtual assistants, summarizing council meetings, expediting code development and testing for software applications, flagging risks of fraud in procurement and tax collection, and drafting job requisitions, governments need to think of the long-term impacts of AI and GenAI.

They need to think of what will happen when AI is used pervasively across industries and is widely accessible by individuals on their smartphones — when the potential benefits and risks of AI will impact government operations well beyond the current stage of maturity and affect the government’s role in society.

The Potential Impact of AI and GenAI on Future Government Operations and Policy

AI has been used in government — particularly by tax, welfare, public safety, intelligence, and defense agencies — for more than a decade. But the advent of GenAI indicates that existing AI applications only scratch the surface of what’s possible.

Government Operations

From a government operations perspective, AI- and GenAI-powered chatbots are just the beginning. European and United Arab Emirates government officials that we recently spoke with are already thinking about how the next generation of virtual assistants could entirely replace government online forms and portals.

For example, a natural language processing algorithm trained to recognize languages, dialects, and tones of voice could enable citizens to apply for welfare programs, farming grants, business licenses, and more just by sending voice messages.

An AI-powered system combining an automatic speech recognition system and an LLM would comb through voice messages to identify the entity (individual or business) making the request and the key attributes, then feed the data to an eligibility verification engine. No forms would need to be filled in manually.
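The flow described above can be sketched as a three-stage pipeline. This is a minimal illustration, not any government's actual system: `transcribe` and `extract_attributes` are hypothetical stand-ins for a real speech recognizer and an LLM, and the eligibility rule (including the 50-hectare limit) is invented purely for the example.

```python
import re
from dataclasses import dataclass


@dataclass
class Request:
    applicant: str   # entity making the request
    program: str     # program being applied for
    hectares: float  # key attribute used by the illustrative rule


def transcribe(voice_message: bytes) -> str:
    """Hypothetical stand-in for automatic speech recognition.

    The "audio" here is plain UTF-8 text so the sketch stays runnable.
    """
    return voice_message.decode("utf-8")


def extract_attributes(transcript: str) -> Request:
    """Hypothetical stand-in for the LLM step.

    A real system would prompt a model; simple patterns are used instead.
    """
    name = re.search(r"my name is ([A-Z][a-z]+ [A-Z][a-z]+)", transcript)
    area = re.search(r"(\d+(?:\.\d+)?) hectares", transcript)
    program = "farming grant" if "farming grant" in transcript.lower() else "unknown"
    return Request(
        applicant=name.group(1) if name else "unidentified",
        program=program,
        hectares=float(area.group(1)) if area else 0.0,
    )


def is_eligible(request: Request, max_hectares: float = 50.0) -> bool:
    """Illustrative eligibility rule (an assumption, not a real policy)."""
    return (
        request.applicant != "unidentified"
        and request.program == "farming grant"
        and 0 < request.hectares <= max_hectares
    )


def handle_voice_message(voice_message: bytes):
    """Run the pipeline: speech-to-text, extraction, eligibility check."""
    request = extract_attributes(transcribe(voice_message))
    return request, is_eligible(request)


message = b"Hello, my name is Ana Costa and I want a farming grant for 12 hectares."
request, eligible = handle_voice_message(message)
print(request, eligible)
```

Keeping the three stages as separate functions means each one can be logged and audited on its own, which bears on the transparency and accountability questions this kind of system raises.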

This scenario is not too far off. A regional government we spoke with is already collecting voice samples to test such a system for farming grant applications.

But multiple questions are raised. Legal and technical questions like: How and where should voice data be collected and stored to comply with GDPR? How can a citizen’s or business owner’s identity be verified through a voice message in compliance with GDPR and eIDAS? How can the government remain transparent and accountable for its decisions if there is not even a digital front end?

It also raises business and operational questions like: Will such a system really replace online forms — or instead become an additional channel that segments of the population use, thus pushing the volume of requests to a level that causes delays in government responses? Will the pervasive use of GenAI in the private sector multiply that volume effect?

Will lawyers’ pervasive use of GenAI incentivize them to file more proceedings, even ones they do not expect to win, because it is so easy that they may as well try? How will government business, legal, operational, technical, and functional capabilities evolve to cope with these challenges?

Policy

From a policy perspective, the spectrum of open questions is expanding by the day. One of the most critical questions, and one that many are thankfully already asking, is about the impact of AI-powered automation on the job market.

If workers are displaced by AI-powered automation, there is no silver bullet. Training programs are not fast enough and may not work for everybody.

Universal basic income can be part of the recipe. But how much is affordable and what is the right level of income? Will the government need to consider employing more people to cushion a drop in employment in other industries?

If so, are roles requiring both expertise and empathic interactions, such as education, healthcare, and social care, the right public sector domains to do so? If new jobs appear on the market, how does that impact worker social protection policies?

In a year when half of the global population will be asked to cast a vote, the impact of AI on democracy is also called into question. AI is already generating a surge in misinformation and increasing risks of polarized political positions.

What if the attempt of mainstream media to protect copyrights from web crawlers used to feed LLMs unintentionally opens the door for bad actors to make even more misinformation available to train GenAI? Does the government need to establish counter-misinformation authorities or issue laws and guidelines that hold the private sector accountable to do so?

If a government authority is established, how can it ensure public oversight and independence from the already existing cyberunits of defense and intelligence departments, which have a different mission? In France, a recent debate over media independence and balanced journalism might be settled by AI that analyzes speeches and attendees to ensure pluralism. But who would train a democratic judge of pluralism?

What about the government’s ability to regulate private markets? What if AI and GenAI accelerate medical science through analysis of vast amounts of real-world health data that have been historically hard to collect and prepare for algorithm training? What if, for example, such an acceleration in medical sciences finds a cure that diabetics can use to treat their disease once and for all, instead of having to take medication for the rest of their lives? What would be the impact on the revenue model of pharma companies? Will governments have to change intellectual property rights entirely, to make sure that pharma companies invest in such treatments and make them affordable to all diabetic people around the world?

The same goes for cultural companies and intellectual properties. What would be the role of governments to ensure that culture workers can continue to participate in the entertainment industry and in the creativity and identity of a country through their art?

Finally, what are the ethical implications of using AI in warfare? There are already systems that can alert snipers to targets. What is their impact on the rules of engagement on the battlefield and on the accountability of the individual soldier and the chain of command?

These are big questions that require technology, legal, policy, ethical, and process experts to come together. They cannot be left to the chief information officer or the chief data officer. And they require civil service and policymaking leaders to engage openly with the public, with academic and private sector experts, to avoid the risks of being influenced (or perceived as being influenced) only by lobbyists. They require international collaboration. They require measuring the value of AI not just in terms of productivity, but also in terms of fairness, robustness, responsibility, and social value.

HEDT Revival: AMD Ryzen Threadripper 7980X Processor Review, Featuring the AMD Radeon PRO W7700 Professional Graphics Card

On October 19th, 2023, AMD announced new processors for the workstation and high-end desktop (HEDT) markets. The processors are based on 5nm Zen 4 architecture and offer up to 96 cores and 192 threads of performance.

The Ryzen Threadripper PRO 7000WX series of processors, which are designed for professionals and businesses that demand top-tier performance, reliability, expandability, and security, feature AMD PRO technologies and eight channels of DDR5 memory.

Meanwhile, the Ryzen Threadripper 7000 series signals AMD’s return to the HEDT market, offering overclocking capabilities and the maximum clock rates possible on a Threadripper-based CPU. Power, performance, and efficiency are all made possible by 5nm technology and Zen 4 architecture. The Threadripper 7000 series provides ample I/O channels for desktop users, with up to 48 PCIe Gen 5.0 lanes for graphics, storage, and more.

The new processors were available from OEM and system integrator (SI) partners, including Dell Technologies, HP, and Lenovo, as well as do-it-yourself (DIY) retailers, from November 21st, 2023.

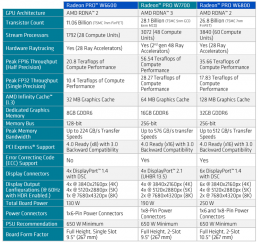

On November 13th, 2023, AMD announced the Radeon PRO W7700, a new workstation graphics card that offers high performance, reliability, and top-notch price/performance ratios for professional applications. The new card bridges the gap between the high-end Radeon PRO W7800 (32GB GDDR6) and the entry-level Radeon PRO W7600 (8GB GDDR6). The 16GB VRAM graphics card supports DisplayPort 2.1, AI acceleration, and hardware-based codecs for video editing and production.

This review will focus on the AMD Ryzen Threadripper 7980X processor, with additional coverage of the AMD Radeon PRO W7700 professional graphics card.

Test System Details

AMD Ryzen Threadripper 7980X Processor

The AMD Ryzen Threadripper 7980X processor (non-pro) signals AMD’s return to the HEDT market, offering overclocking capabilities and the maximum clock rates possible on a Threadripper series CPU.

Its power, performance, and efficiency are made possible by 5nm technology and Zen 4 architecture, and the processor is available to both the DIY market and SI partners.

AMD Radeon PRO W7700

With 16GB of error-correcting code (ECC) memory, the AMD Radeon PRO W7700 easily handles data-intensive operations. In terms of visual fidelity, the card features the new Radiance Display Engine, which supports 12-bit high dynamic range (HDR) color and recreates over 68 billion unique colors with high precision.
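The 68 billion figure follows directly from the bit depth, assuming 12 bits for each of three color channels (red, green, and blue; the channel count is our assumption, not stated in the spec summary above). A quick check:

```python
BITS_PER_CHANNEL = 12  # 12-bit HDR color, from the text
CHANNELS = 3           # assumption: red, green, and blue

levels_per_channel = 2 ** BITS_PER_CHANNEL
total_colors = levels_per_channel ** CHANNELS

print(f"{levels_per_channel:,} levels per channel")  # 4,096
print(f"{total_colors:,} colors in total")           # 68,719,476,736
```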

The Radeon PRO W7700 GPU’s major features are its 48 unified RDNA 3 compute units, 48 second-generation ray accelerators, and 96 AI accelerators. The card has 16GB of GDDR6 ECC memory and four DisplayPort 2.1 (UHBR 13.5) connectors. The connectors, which provide up to 52.2 Gbit/s total bandwidth, are designed for 10K displays with 60Hz refresh rates, 2x8K displays, or 4x4K displays with Display Stream Compression technology.

AMD’s new dual media engine offers hardware-accelerated support for AV1 encoding, with the Radeon PRO W7700 capable of delivering 7680×4320 video at 60fps (8K60). The media engine supports two AVC and HEVC streams that can be encoded or decoded simultaneously. For live broadcasters, AMD has included many capabilities that increase both performance and quality.

Memory and Motherboard

We installed the Ryzen Threadripper 7980X processor on a Gigabyte TRX50 AERO D motherboard, alongside the G.SKILL Zeta R5 Neo DDR5-6400, CL32-39-39-102, 1.40V, 128GB (4x32GB) kit with AMD EXPO memory overclocking and ECC support enabled.

AMD Ryzen Threadripper CPUs only support DDR5, LRDIMM, and 3DS RDIMMs. Threadripper 7000 processors can handle up to 8 channels/2TB on PRO motherboards (based on 8x256GB DIMMs) and up to 4 channels/1TB on HEDT motherboards (based on 4x256GB DIMMs), with support for both single-rank and dual-rank at 5200MHz and a single DIMM per channel. ECC is enabled, although its functioning varies depending on the motherboard. The maximum official transfer rate varies by DIMM configuration, as with other AMD Ryzen CPUs.
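The capacity figures above are simple products of channel count and DIMM size. The sketch below reproduces them and adds a theoretical peak bandwidth estimate. The bandwidth part rests on assumptions that are not from the review: one DIMM per channel, a 64-bit (8-byte) data path per channel, and the 5200 figure read as mega-transfers per second. Real-world throughput will be lower.

```python
def max_capacity_tb(channels: int, dimm_gb: int = 256) -> float:
    """Maximum memory in TB with one DIMM of `dimm_gb` per channel."""
    return channels * dimm_gb / 1024


def peak_bandwidth_gbs(channels: int,
                       mega_transfers: int = 5200,
                       bytes_per_transfer: int = 8) -> float:
    """Theoretical peak in GB/s: channels x MT/s x bytes per transfer."""
    return channels * mega_transfers * bytes_per_transfer / 1000


for platform, channels in (("PRO", 8), ("HEDT", 4)):
    print(
        f"{platform}: {channels} channels, "
        f"{max_capacity_tb(channels):.0f} TB max, "
        f"{peak_bandwidth_gbs(channels):.1f} GB/s theoretical peak"
    )
```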

Other Components

The Windows 11 main storage device was a 1TB GIGABYTE AORUS NVMe Gen4 solid-state drive. AMD provided a 360mm all-in-one water cooler; however, it did not completely cover the CPU surface. Instead, we used the Arctic Freezer 4U-M, an 8x6mm direct contact heatpipe tower cooler with 2x120mm fans in push/pull mode. This cooler is intended for the most powerful server and workstation CPUs with up to 96 cores and a thermal design power of up to 350W.

The be quiet! STRAIGHT POWER 11 Platinum 850W power supply powered the system. A 34″ Dell Gaming S3422DWG monitor — a Quad-HD 3440×1440 display with a 144Hz refresh rate, FreeSync, 10-bit colors, and HDR support — was also utilized.

Benchmarks

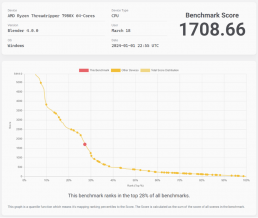

Blender Benchmark

Blender Benchmark version 4.0.0 was used to assess the AMD Ryzen Threadripper 7980X processor’s rendering performance. With a score of 1708.66, the processor’s performance ranked among the top 28% of benchmarks running the same workloads. Given the inclusion of GPU results, the CPU performed brilliantly.

In terms of GPU results, the AMD Radeon PRO W7700 ranked in the top 27% of benchmarks, with a slightly elevated score of 1883.80. This reflects how strong the processor is at GPU-level rendering, which is fantastic news for studios that rely on CPUs for production.

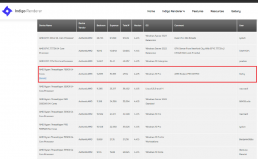

IndigoBench

IndigoBench v4.4.15 is another standalone benchmark based on Indigo 4’s rendering engine and the industry-standard OpenCL.

With a total score of 47.54 million samples per second, the Threadripper 7980X ranks fourth among the top CPU performances when using normal settings and no overclocking. The processor also outperforms the Threadripper 3990X and Pro 5995WX by 30% and 33%, respectively, demonstrating a significant generational jump.

PCMark 10

PCMark 10 is a comprehensive benchmarking tool that covers the wide variety of tasks performed in the modern workplace. Web browsing, videoconferencing, spreadsheet and word processing, photo and video editing, and rendering and visualization are some of the tasks tested by the tool.

The 8,772 score the test platform achieved was better than 98% of all results produced by PCMark 10.

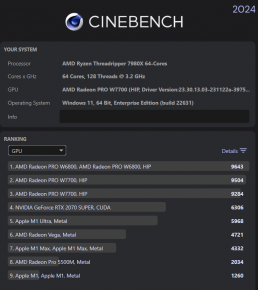

CINEBENCH

The 2024 edition of Cinebench now includes a GPU benchmark that takes advantage of Redshift, Cinema 4D’s default rendering engine. The Radeon PRO W7700 scored 9,504, nearly matching the Radeon PRO W6800, which scored 9,643 (according to the test database). This result demonstrates the level of sophistication of RDNA 3 computation, given that the Radeon PRO W7700 has half the Infinity Cache and dedicated graphics RAM of the W6800.

Based on the 92,817 Cinebench R23 result, the AMD Ryzen Threadripper 7980X CPU is nearly three times faster than the Ryzen 9 7950X. This result demonstrates that the Threadripper is in a class of its own and is a much-needed high-performance solution.

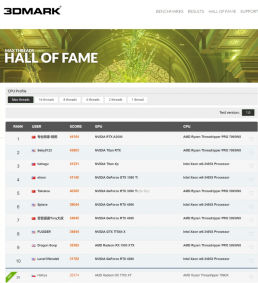

3DMark CPU Profile

This test stresses the CPU at various levels of threading while reducing the GPU burden, ensuring that GPU performance is not a limiting factor. It takes advantage of sophisticated CPU instruction sets supported by different processors, including Advanced Vector Extensions 2 (AVX2). It also leverages the straightforward, highly efficient simulations provided by the SSSE3 code path.

With standard settings and no overclocking, the AMD Ryzen Threadripper 7980X CPU score of 25,374 qualifies for 3DMark’s Max Threads Hall of Fame. It ranks among the top 100 benchmark scores ever recorded, and holds 25th place among the world’s most skilled overclockers.

V-Ray 6 Benchmark

The V-Ray Benchmark, which uses the V-Ray 6 render engines, was used to gauge the system’s rendering speed.

With a vsamples score of 120,247, the AMD Ryzen Threadripper 7980X CPU is nearly twice as fast as the Threadripper Pro 5995WX and 3990X, representing a considerable generational leap.

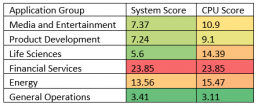

SPECworkstation

The SPECworkstation 3.1 Benchmark fully assesses workstation performance across a variety of professional applications.

The AMD Ryzen Threadripper 7980X CPU scores are highest in application groups that rely more on the processor (such as financial services), while groups that lean on the graphics card score lower. This gap is due to the use of the Radeon PRO W7700, a midrange professional graphics card. Higher results across all application groups could be achieved with the use of the Radeon PRO W7800 or W7900.

Gaming

Since many professional gamers and streamers utilized HEDTs in the past to support multitasking — playing games, encoding and recording gameplay, and streaming to several web platforms — the Threadripper’s gaming performance was evaluated on this professional test platform. Professionals that enjoy playing games would undoubtedly prefer not to invest in another gaming PC after paying a premium for this test platform.

Shadow of the Tomb Raider ran at an average 61 frames per second (fps) at 1440p, with a minimum of 42fps. The highest graphical settings, as well as AMD’s FidelityFX CAS package, were enabled. Surprisingly, the use of XeSS for upscaling while running the game test boosted performance at the same settings, achieving a minimum of 50fps and an average of 66fps (roughly 8% higher on average and 19% higher at the minimum). This might be a demonstration of the RDNA 3 architecture’s AI acceleration capabilities and the Radeon PRO W7700’s AI accelerators.

Far Cry 6 ran at an average 104fps at 1440p, registering a minimum of 92fps. All DirectX Raytracing (DXR) and FidelityFX Super Resolution (FSR) features were enabled during testing.

Cyberpunk 2077 ran at an average 36fps at 1440p, registering a minimum of 28fps. The Ultra ray tracing preset and FSR 2.1 features were automatically enabled.

The fact that the gaming results were 100% GPU bound indicates that the CPU was never a bottleneck and that employing top-tier gaming cards can improve gaming performance.

IDC Opinion and Conclusion

When AMD announced the Threadripper 5000 series in the Pro-only category, primarily for OEMs, the enthusiast community was left feeling let down. However, we are pleased that AMD did not abandon those customers for too long. AMD brought this category back to life after realizing — as its competitor had already done — that this is a prestigious and necessary niche market that cannot be satisfied by high-end consumer CPUs.

We are also pleased to see that the HEDT refresh with the Ryzen Threadripper 7000 platform supports the latest and greatest in networking and connectivity with excellent I/O support, including PCIe 5.0 and DDR5 ECC registered memory modules (RDIMM/RDIMM-3DS), in addition to USB4 Type-C, 10 Gigabit Ethernet (10GbE), and Wi-Fi 7.

In the past, it was impossible to reach extremely high speeds while remaining stable and controlling voltage and temperature. This CPU, however, is quick, snappy, and opportunistic: it can surge up to 5.1 GHz when just a few cores are in demand and runs at 4.1 to 4.7 GHz when all cores are stressed, which is incredible.

Furthermore, attaining memory rates of up to 6400MHz is another productivity breakthrough, as it was previously difficult to overclock ECC RAM above the norm.

Aside from its intense performance, efficiency is the most striking aspect of the processor. Under full load, the Threadripper 7980X’s power consumption did not go over 340W. High-end consumer CPUs with fewer cores use the same amount of energy.

Although the Radeon PRO W7700’s power draw stayed under 140W, we were not as satisfied with its clock speed, and thought there was headroom for a higher frequency that had been purposefully capped. With our 850W platinum power supply, we had no trouble operating the system overall, and were even able to install it in a midi tower case.

We would love to see more partner solutions for cooling to fully cover the processor’s integrated heat spreader as well as motherboard support for extreme high-end use cases that require up to seven or eight graphics cards. The Threadripper 7000 series is more than capable of handling booming AI, machine learning, and training solutions — as well as media production and automotive rendering workloads — when needed on desktop platforms.

AMD should consider an SI certification scheme, similar to AMD Advantage in gaming. By doing so, it can provide customers with reliable and better experiences on an all-AMD platform that features the Threadripper and the Radeon PRO. This strategy will strengthen trust in the AMD brand and help SIs compete against OEMs with ISV-approved devices.

In conclusion, the AMD Ryzen Threadripper 7980X reigns supreme among HEDT CPUs. It delivers great performance straight out of the box, with most cores running at the highest clock speeds in a very energy efficient manner.