8 Future of Work Trends for 2024 and Beyond

End Users Tell IDC About the Trends

Changes are occurring in the work environment that can no longer be ignored or dismissed with superficial comments such as “This is how things are evolving, so you need to accept them.”

Today, the employee experience must be nurtured as a complete package, and close attention must be paid to the demands of younger employees entering the workforce.

The statements above are some of the thought-provoking perspectives that technology end users voiced to IDC during deep-dive discussions at IDC’s Future of Work and AI Summit in London and our Future of Work Summit in Milan. During these events, both of which took place in March, IDC held wide-ranging conversations with more than 100 Italy- and U.K.-based IT and HR experts who work in industries including education, manufacturing, finance, and healthcare.

The talks revealed 8 Future of Work trends that are likely to impact workplaces in 2024 and beyond.

- Using Tech to Boost Productivity and User Experience in Hybrid Workspaces: The experts IDC spoke to favored greater technology adoption, including of intuitive technologies, to unlock productivity improvements and help employees close digital skills gaps. They emphasized the need for cultural change in the workplace, including clear communication to employees about the benefits of new technologies. The experts noted that hybrid working models will require organizations to redesign office spaces to enable digital parity between remote and onsite workers.

- Assessing AI’s Impact on the Workforce: The experts were generally of the view that AI and automation will have a positive impact on processes, employee productivity, and innovation. Organizations should make upskilling a priority, as new skills will be required to advance these technologies. Attention must also be paid to the EU’s new Artificial Intelligence Act, which demands greater transparency and traceability of AI initiatives and contains requirements for removing bias that could be fed into large language models (LLMs).

- Ensuring Cybersecurity in Flexible Work Environments: Cybersecurity remains critical, especially for organizations that employ remote workers and/or employees who split time between working at the office and at home. IDC’s discussions pointed to the need to deploy multiple layers of safeguards, such as cryptography and virtual desktops, to protect data and assets connected to the organization’s networks. Regardless of their location (i.e., home or office), workers must be continually trained on cybersecurity, including how to protect IT and OT data in converged environments.

- Leveraging Data, Automation, and Innovation to Build Intelligent HR: When applications are being created, employees in different functions may not share the same understanding of the processes that need to be designed. A pivotal first step toward ensuring user adoption is making certain that everyone involved shares the same understanding of goals and processes. The IT function, for example, should not spend time developing solutions that will not ultimately serve user needs efficiently and effectively. A complicating factor is that many organizations are still stuck with legacy solutions that hinder technological advancement. Governance is another challenge: many organizations are struggling to develop and implement processes that guarantee clean, ready-to-use data for AI and GenAI applications.

- Fine-Tuning Hybrid and Flexible Work Models: Hybrid and flexible models require a high level of employer trust in workers’ ability to be productive outside the office. Some of the experts IDC spoke to indicated that many Italian senior managers remain skeptical about the benefits of work-from-home policies and continue to demand that their workforces return to the office. On the workforce side, there is growing demand for clear objectives and detailed KPIs. In general, the experts regard hybrid and flexible working models as at least as productive as office-only models, and in some cases more so. Flexible working models can be critical to employee engagement, especially for caregivers, parents, and members of the younger generation.

- Boosting Employee Engagement and Retention: Companies can use multiple levers to improve employee engagement and retention. These include fostering in-office/in-person connections, team building, and providing clear and continuous feedback to employees from the top to the bottom of the organization. Technology plays a pivotal role in such initiatives. Employees, for example, are usually happier and more engaged if they are satisfied with the technologies used in their workplace. The experts at our meetings also told us that the expectations of the incoming generation of workers are driving organizations to reshuffle their employee engagement priorities and requirements.

- Connecting the Future of Work and Sustainability: Organizations in the U.K., Ireland, and Italy are increasingly responsive to environmental, social, and governance (ESG) priorities. Considerable effort and resources are being invested in the “E” component as companies act to shrink their carbon footprints, for example, by shifting to more carbon-neutral cloud solutions. Initiatives connected to the “S” component are raising organizational awareness of issues such as gender parity, inclusion, digital accessibility, and community commitment. The “G” component focuses on the R&D and implementation of technologies to collect and analyze reporting data. To meet their ESG commitments efficiently, companies are seeking to onboard sustainability experts across all organizational levels.

- Analyzing How Skills and Talent Are Evolving: Organizations continue to struggle to find employees with the skills to help them stay abreast of new technologies and innovations. On the one hand, we see AI boosting productivity and making some tasks and jobs obsolete. On the other, there is rising demand for people with the “hard” technical skills to effectively manage AI and connect AI with humans. Demand is also rising for people who possess the “soft” skills to manage the creativity and needs of human employees. Employees who can effectively fulfill these roles will be highly valued and rewarded.

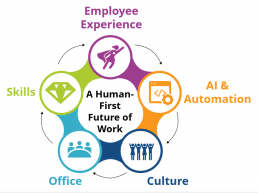

Many of the above points are succinctly summarized in IDC’s Human-First Future of Work Framework, which is based on five pillars that are essential for any business seeking to build a sustainable, human-first work environment.

Interested in a deeper understanding of the issues discussed here? Contact IDC’s Future of Work Team or connect with us on LinkedIn for live updates from the EMEA Xchange Summit in Malaga on April 15–16, 2024.

A Glimpse into the Future for European Digital Natives

Deal-making, valuations, and exit activity by digital-native businesses (DNBs) in the European venture market all declined in 2023, according to Atomico’s The State of European Tech 2023. This market correction, however, can be considered a worldwide phenomenon.

The key fundamentals that led to a downturn in the funding environment over the last two years are still in place. Limited partners remain cautious about providing more money to the venture capital (VC) ecosystem due to persistent macroeconomic and geopolitical uncertainties. With difficulties continuing in the funding environment, the number of exits is expected to remain limited in the short term, with M&A and consolidation the more likely outcomes.

With all this as a backdrop, what will 2024 look like for European DNBs?

From AI to Sustainability Technologies: Where Is the Money?

Because of this lack of activity, European venture capital firms hold a substantial amount of dry powder that could be invested in selected deals this year. A 2024 rebound is expected if interest rates are cut, which could lower limited partners’ risk perception. While only 10 new unicorns (privately owned companies valued at more than $1 billion) were created in Europe in 2023, down from 46 in 2022, we expect a larger number of DNBs to join the unicorn cohort if deal-making picks up.

European artificial intelligence (AI) DNBs are expected to be at the forefront of investors’ interest again in 2024. While VCs and corporate VCs focused their 2023 deals on large language models (LLMs), deals will most probably shift toward vertical AI applications. With regulations such as the EU AI Act coming into effect, investment will also shift toward start-ups and scale-ups focused on AI security and privacy.

Sustainability technology DNBs, from carbontech to climatetech, dominated capital flows in 2023, and the segment is expected to attract more capital in 2024 too, with climate change a key topic on European (and worldwide) leaders’ agendas, as demonstrated by the outcomes of COP28. Furthermore, tech start-up growth in Europe is supported by national and EU stimulus funds, such as the European Innovation Council (EIC) work programme 2024, which allocates €1.2 billion for strategic technologies and for scaling up companies in deep tech innovations, from spacetech to quantum technologies.

How Will External Conditions Shape European DNBs’ Technology Investments?

Uncertain market conditions are pushing digital natives to reprioritize their tech spending toward optimizing processes and increasing profitability, but tech expenditure will not be cut, as it is essential to sustaining their digital-based business models. More specifically, security technologies and cloud platforms are pivotal investments for developing secure and scalable digital products and services, while increased focus on AI and automation technologies is set to make larger DNBs leaner and more cost-effective. Investments in data infrastructure, integration, and quality will remain pivotal to boosting wider AI adoption, including in customer experience initiatives aimed at retaining and expanding the existing customer base.

Want to know more? You can find these and other key trends driving the European DNB landscape in IDC’s 2024 Digital-Native Business Trends, or get in touch directly at mlongo@idc.com.

The State of Implementation of Generative AI in Manufacturing

San Francisco-based OpenAI’s introduction of ChatGPT on November 30, 2022, marked a significant milestone in the development of large language models (LLMs) and generative AI (GenAI) technology. The launch by OpenAI, the creator of the initial GPT series, sparked a race among technology vendors, system providers, consultants, and app builders. These entities immediately recognized the potential of ChatGPT and similar models to revolutionize industries.

2023 saw a surge in efforts to develop GenAI tools that are smarter, more powerful, and less prone to hallucinations. The competition led to an influx of innovative ideas and tools aimed at harnessing the capabilities of LLMs. The goal became to use these models to enhance productivity, competitiveness, and customer experience across diverse sectors.

With ChatGPT paving the way, a broad range of organizations and professionals are exploring how to integrate GenAI into workflows and solutions. The widespread interest and investment have underscored the technology’s transformative potential and laid the groundwork for its continued evolution in the years to come.

4 Use Cases for GenAI in Manufacturing

In manufacturing organizations, the utilization of GenAI-powered tools and solutions is primarily focused on four key areas:

- Content Generation: This includes automated report generation, in which GenAI algorithms are employed to automatically generate reports based on predefined parameters and data inputs.

- User Interface Enhancement: This involves the integration of chatbots into user interfaces, enabling more intuitive and interactive communication between users and systems.

- Knowledge Management: GenAI facilitates knowledge management by providing co-pilot services that help users access and interpret vast amounts of data and information.

- Software and Delivery: This encompasses various applications, such as code generation, in which GenAI is leveraged to automate the creation of software code, streamlining development processes.

According to IDC’s GenAI ARC Survey of 2023, manufacturing organizations are actively evaluating or implementing GenAI solutions.

Around 30% of European respondents have already invested significantly in GenAI, with spending plans established for training, acquiring GenAI-enhanced software, and consulting. Nearly 20% are conducting initial model testing and focused proofs of concept but don’t yet have a spending plan in place.

These results suggest steady growth in the adoption of GenAI-powered tools and solutions within the manufacturing sector. The initial hype surrounding GenAI in 2023, fueled by its perceived potential as a “wonder technology,” has evolved into a pragmatic recognition of its capacity to address ongoing challenges such as workforce shortages, skills gaps, language barriers, data complexity, regulatory compliance, and more.

In the manufacturing industry, GenAI is increasingly viewed as an enabling technology capable of facilitating innovation and overcoming barriers to success.

Framework for Manufacturing Organizations to Implement GenAI

To fully capitalize on the potential of GenAI pilots, manufacturing organizations recognize the need for comprehensive frameworks that encompass processes and policies. Key measures include:

- Data Sharing and Operations Practices: Organizations should prioritize the implementation of practices that ensure data integrity for LLMs developed internally or in collaboration with third parties. This ensures that data used in GenAI models is accurate, reliable, and ethically sourced.

- Corporate-Wide Guidelines for Transparency: Guidelines should be established to evaluate transparency and track the use of GenAI code, data, and trained models throughout the organization. This promotes accountability in GenAI usage.

- Mandatory GenAI Awareness and Acceptable Use Training Programs: Mandatory training programs should be implemented to raise awareness of GenAI capabilities and ethical considerations among designated workforce groups. This helps ensure that employees understand how to responsibly utilize GenAI technologies.

As excitement over the capabilities of GenAI has died down, organizations are becoming increasingly aware of the risks posed by potential intellectual property theft and privacy threats linked to the technology.

To address these concerns, many organizations are prioritizing the establishment or expansion of formal AI governance, ethics, and risk councils tasked with overseeing the ethical use of GenAI and mitigating risks associated with privacy, manipulation, bias, security, and transparency.

As a manufacturing interviewee in one of my studies put it, “The governance framework is indispensable in ensuring responsible and ethical AI implementation.” This underscores the importance of implementing robust governance measures to ensure the ethical use of GenAI within manufacturing organizations.

Deployment Strategies

Strategies for selecting the right solution for the right use case can vary substantially. A global white goods company, for example, piloted several GenAI-powered use cases in 2023. Its selection and deployment strategy encompassed a range of approaches, including:

- Off-the-Shelf Solutions: The company utilized ready-to-use, commercially available GenAI-embedded software-as-a-service solutions. These offered immediate access to GenAI capabilities without the need for extensive development or customization.

- AI Assistants: It deployed AI assistants to support specific tasks within its business processes. These assistants helped, for example, to create designs based on predetermined workflows, providing valuable support and efficiency gains.

- AI Agents: The company deployed AI agents in complex use cases requiring the orchestration of workflows and decision-making based on AI-driven insights. The agents leveraged GenAI to analyze data and make informed decisions autonomously.

A primary challenge often mentioned in such endeavors is selecting the optimal LLM for company-specific use cases from a multitude of possibilities. With new models and solutions constantly emerging and becoming accessible, this task can be daunting. The selection process typically involves thorough market research, vendor presentations, and internal discussions about the technology framework underlying current and future use cases.

However, the success of GenAI ultimately hinges on the quality and quantity of the data utilized. Curating a diverse and sufficient data set is critical to ensuring unbiased outcomes and maximizing the effectiveness of GenAI solutions. Data curation therefore remains a cornerstone of success in leveraging GenAI technologies.

The Bottom Line

GenAI-powered technology holds immense potential across industries and regions, offering capabilities that traditional machine learning algorithms or neural networks may struggle to match in terms of breadth and depth. GenAI can act as a co-pilot for human workers, helping address challenges associated with an aging and/or underqualified workforce.

However, organizations must prioritize addressing concerns such as data leakage, biases, and maintaining sovereignty over IT processes running in the background. These issues must be carefully managed to ensure the responsible and ethical implementation of this powerful technology.

Dilemmas for Software Vendors When Embedding Generative AI into Applications

The past year and a half has demonstrated the impressive capabilities of generative AI (GenAI) systems such as ChatGPT, Bard, and Gemini. Business application vendors have since raced to incorporate the newly enabled capabilities (summarizing, drafting text, natural language conversation, etc.) into their products. And organizations across industries have started to deploy generative AI to help serve customers, hoping that GenAI-powered chatbots could provide a better customer experience than the failed and largely useless service chatbots of the past.

The results have started to come in, and they are mixed. The service chatbots of organizations such as Air Canada and DPD have made unsubstantiated offers or even produced rogue poetry. Another customer chatbot, at a Nordic insurance company, was not updated after the latest website reorganization and kept sending customers to outdated and decommissioned web pages.

The popular Microsoft Copilot hallucinated about recent events and invented occurrences that never happened. In one instance from personal experience, a customer meeting summary written by generative AI included a final evaluation of the meeting as “largely unproductive due to technical difficulties and unclear statements,” an assessment the human participants did not share.

These issues highlight several dilemmas related to using generative AI in software applications:

- Autonomous AI functions versus human-supervised AI. Autonomous AI is attractive to customer service departments because of the cost difference between a chatbot and a human customer service agent. This cost saving potential must, however, be balanced against the risk of reputational damage and negative customer experiences as a result of chatbot failures and mishaps.

Instead, designing solutions with a “human in the loop” may have multiple benefits. Incorporating employee oversight to guide, validate, or enhance the performance of AI systems may not only improve output accuracy but also increase adoption of GenAI solutions. For example, a customer service agent could have a range of tools, such as automatically drafted chat and email responses, intelligent knowledge bases, and summarization tools, that augment productivity without replacing the human.

- At what point is company-specific training enough? In other words, should organizations make extensive training investments in company-specific large language models (LLMs), or rely on out-of-the-box LLMs, such as ChatGPT, for good-enough answers? In some of the generative AI failures described above, the company-specific training of the AI engine appears to have been too superficial and did not cover enough interaction scenarios.

As a result, the AI engine fell back on its foundation LLM, such as GPT or PaLM, which in some cases acted in unexpected and undesired ways. Organizations are understandably eager not to reinvent the wheel with respect to LLMs, but the examples above show that overreliance on general-purpose LLMs is risky.

- Keeping the chat experience simple versus allowing the user to report issues. Such issues include errors, biased information, irrelevant information, offensive language, and incorrect formatting. To this end, it is crucial to understand sources and training methods. A clean user interface contributes to a good software user experience. In the context of generative AI, think of the prompt input field in an application. Conventional wisdom suggests keeping this very clean. However, what is the user supposed to do in case of errors or other unacceptable AI responses, and how is the user supposed to verify sources and methodologies?

This is linked to the need for “explainable AI,” which refers to the concept of designing and developing AI systems in such a way that their decisions and actions can be easily understood, interpreted, and explained by humans.

The need for explainability has arisen because many advanced machine learning models, especially deep neural networks, are often treated as “black boxes” due to their complexity and the lack of transparency in their decision-making processes.

- Using generative AI for very specific and controlled use cases versus general AI scenarios. One way to potentially curb the risk of AI errors is to confine AI to specific, limited use cases within an application. One example is a “summarize this” button placed next to a field with unstructured text as part of a specific user experience. There is a limit to how wrong this can go, as opposed to an all-purpose, prompt-based digital assistant.

This dilemma is difficult simply because general-purpose assistants are so attractive, an appeal that has prompted vendors to announce such assistants (e.g., Joule from SAP, Einstein Copilot from Salesforce, Oracle Digital Assistant, and Sage Copilot).

- Charging customers for generative AI value versus wrapping it into existing commercial models. GenAI is known to be expensive in terms of compute costs and the manpower needed to orchestrate and supervise training. This raises the question of whether such new costs should be passed on to customers.

This is a complex dilemma for a number of reasons. Firstly, AI costs are expected to decline over time as this technology matures. Secondly, AI functionality will be embedded into standard software, which is already paid for by customers.

The embedded nature of many AI application use cases will make it very difficult for vendors to charge for incremental, separate new AI functions. Mandatory additional AI-related fees on existing SaaS solutions are likely to meet strong objections from customers.

- Sharing the risk of inaccurate generative AI outputs with customers and partners versus letting customers be fully accountable. Generative AI will increasingly be used to support key personas’ decision-making processes in organizations. What if it hallucinates and the outputs are misleading? And what if the consequence is a wrong decision that has a serious negative impact on the client organization? Who will take responsibility for the consequences of those actions? Should customers bear this burden alone, or should accountability be distributed among vendors, their partners (e.g., LLM providers), and end customers?

In any case, vendors should maintain full transparency over their solutions (including clear procedures for training, implementing, monitoring, and measuring the accuracy of generative AI models) so that they can immediately provide the required information to customers in an emergency.

Having taken the enterprise technology space by storm, generative AI is likely to progress more slowly than initially expected. As a new technology, GenAI might enter the “phase of disillusionment,” to paraphrase colleagues in the analyst industry.

This slowdown will be driven by a more cautious adoption of AI in enterprise software, as new horror stories instill fear of reputational damage in CEOs across industries. We believe that new generative AI rollouts will have more guardrails, more quality assurance, more iterations, and much better feedback loops compared to earlier experiments.